SimLauncher: Launching Sample-Efficient Real-world Robotic Reinforcement Learning via Simulation Pre-training

IROS 2025 (Oral Presentation)

Recent advances in robotic reinforcement learning (RL) have demonstrated remarkable performance and robustness in real-world visuomotor control tasks. However, real-world RL suffers from slow exploration and therefore requires many human demonstrations or interventions to guide it. In contrast, simulators offer a safe and efficient environment for extensive exploration and data collection, while the visual sim-to-real gap, often a limiting factor, can be mitigated using real-to-sim techniques.

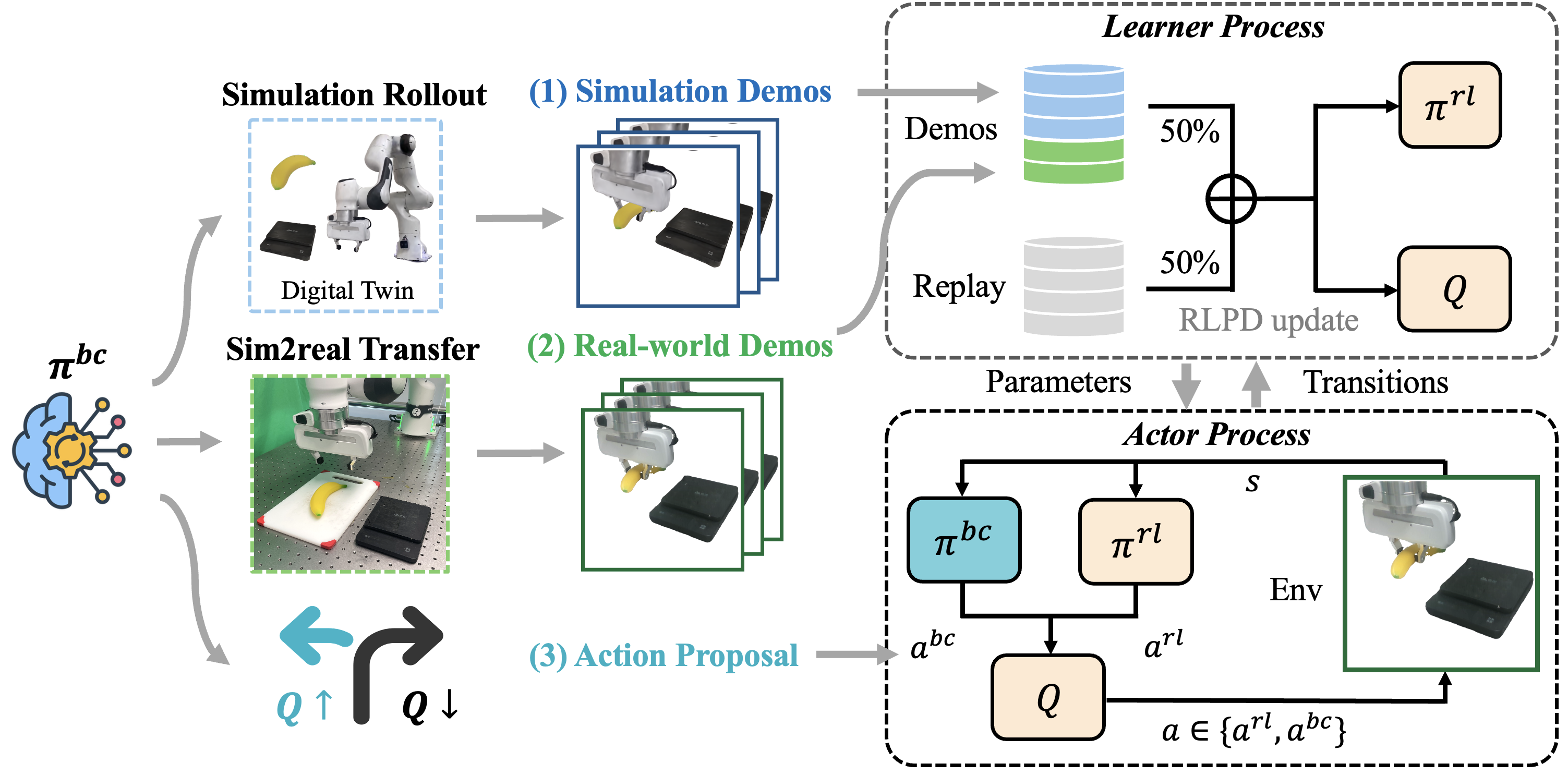

Building on these observations, we propose SimLauncher, a framework that combines the strengths of real-world RL and real-to-sim-to-real approaches to overcome these challenges.

Specifically, we first pre-train a visuomotor policy in a digital twin simulation environment. The pre-trained policy then benefits real-world RL in two ways:

(1) bootstrapping target values using extensive simulated demonstrations together with real-world demonstrations derived from pre-trained policy rollouts;

(2) incorporating action proposals from the pre-trained policy for better exploration.
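
The action-proposal mechanism in (2) can be illustrated with a minimal sketch. It assumes an IBRL-style selection rule, in which the critic compares the RL actor's noise-perturbed action with the pre-trained policy's proposal and the higher-valued candidate is executed. This page does not specify SimLauncher's exact rule, and `propose_action` and its arguments are hypothetical names.

```python
import numpy as np


def propose_action(obs, rl_actor, pretrained_policy, critic, rng, noise_std=0.1):
    """Choose between the RL actor's action and the pre-trained policy's proposal.

    Assumed IBRL-style rule (not confirmed for SimLauncher): execute whichever
    candidate the current critic assigns the higher Q-value; ties go to the RL actor.
    rl_actor, pretrained_policy: callables mapping obs -> action array in [-1, 1].
    critic: callable mapping (obs, action) -> scalar Q estimate.
    """
    # Candidate 1: RL actor action plus Gaussian exploration noise, clipped to bounds.
    a_rl = rl_actor(obs)
    a_rl = np.clip(a_rl + noise_std * rng.standard_normal(a_rl.shape), -1.0, 1.0)
    # Candidate 2: action proposed by the simulation-pre-trained policy.
    a_pre = pretrained_policy(obs)
    # Execute whichever candidate the critic values more.
    if critic(obs, a_pre) > critic(obs, a_rl):
        return a_pre, "pretrained"
    return a_rl, "rl"


# Toy usage: a critic that prefers actions close to 0.5 in every dimension.
rng = np.random.default_rng(0)
critic = lambda o, a: -float(np.sum((a - 0.5) ** 2))
action, source = propose_action(
    np.zeros(4), lambda o: np.full(2, -0.8), lambda o: np.full(2, 0.5), critic, rng
)
print(source)  # prints: pretrained
```

Early in training, when the RL actor is still poor, a rule like this lets the pre-trained policy's proposals drive exploration; as the critic learns that the RL actor's actions are better, they are selected more often.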

Compared to prior real-world RL approaches, SimLauncher significantly improves sample efficiency and achieves near-perfect success rates. We hope this work serves as a proof of concept and inspires further research on leveraging large-scale simulation pre-training to benefit real-world robotic RL.

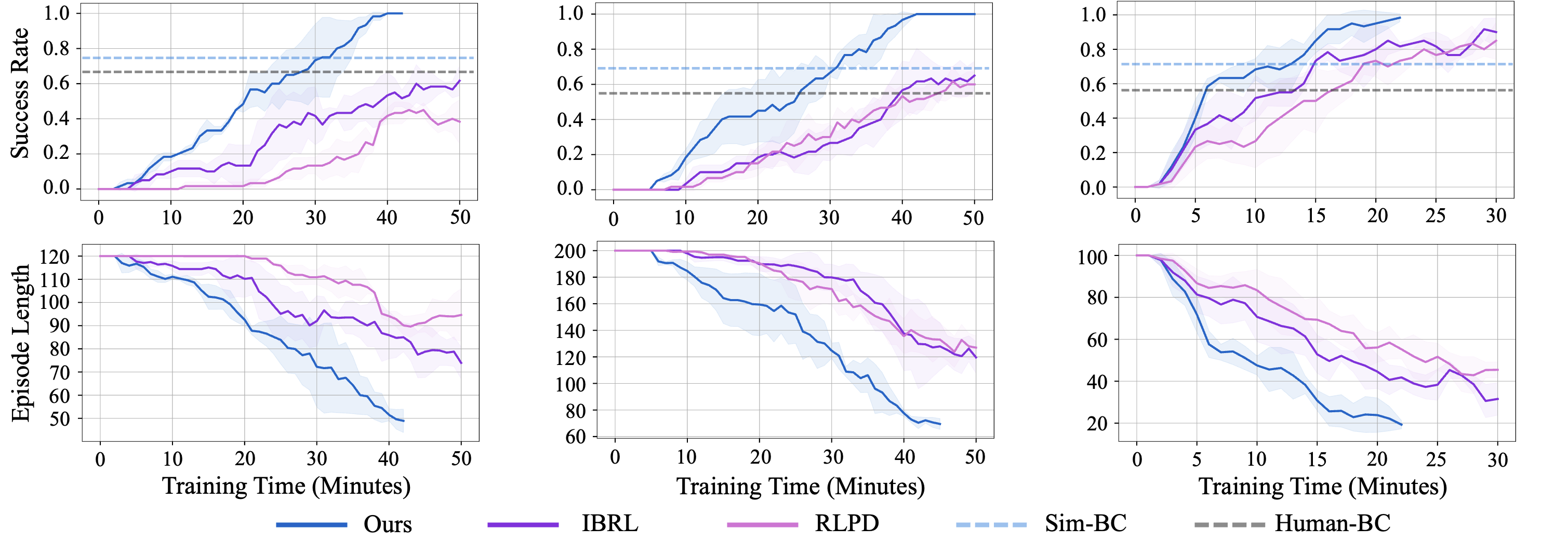

We show the complete training process and learning curves of SimLauncher on three tasks; curves are averaged over three runs.

Speedup of SimLauncher over IBRL (the best RL method that uses only human demonstrations):

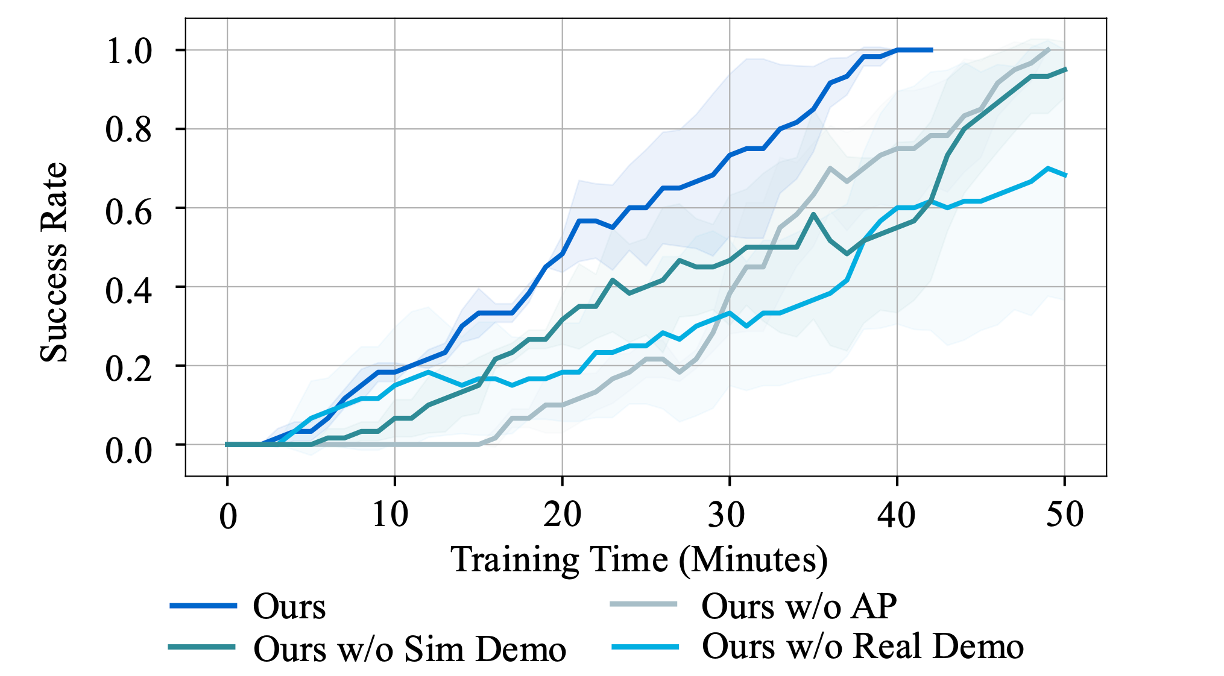

Removing any of our three key components (sim demos, real demos, or action proposals) degrades performance, indicating that all three components are effective.

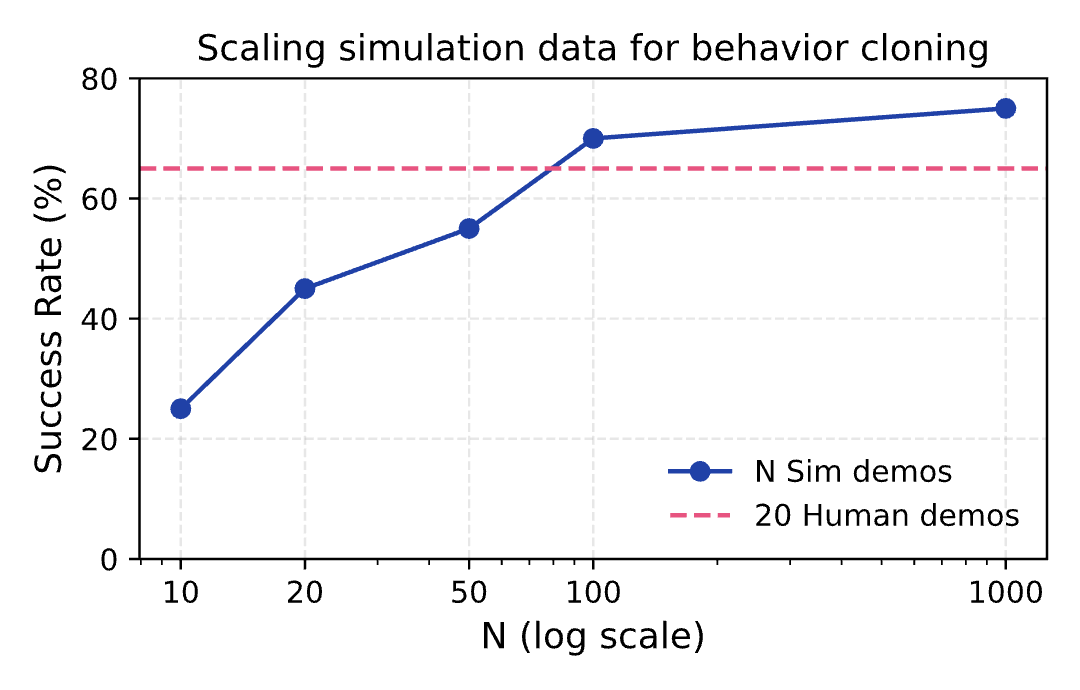

Takeaway 1: Scaling simulation pre-training improves sim-to-real policy transfer.

We train behavior cloning (BC) policies with different numbers of sim demos.

Unsurprisingly, when the number of demos is held fixed, BC with real demos outperforms BC with sim demos, likely because of the sim-to-real gap.

However, when we scale up sim demos, which is very efficient in simulation, BC with sim demos outperforms BC with real demos.

This suggests that scaling simulation pre-training does improve sim-to-real policy transfer.

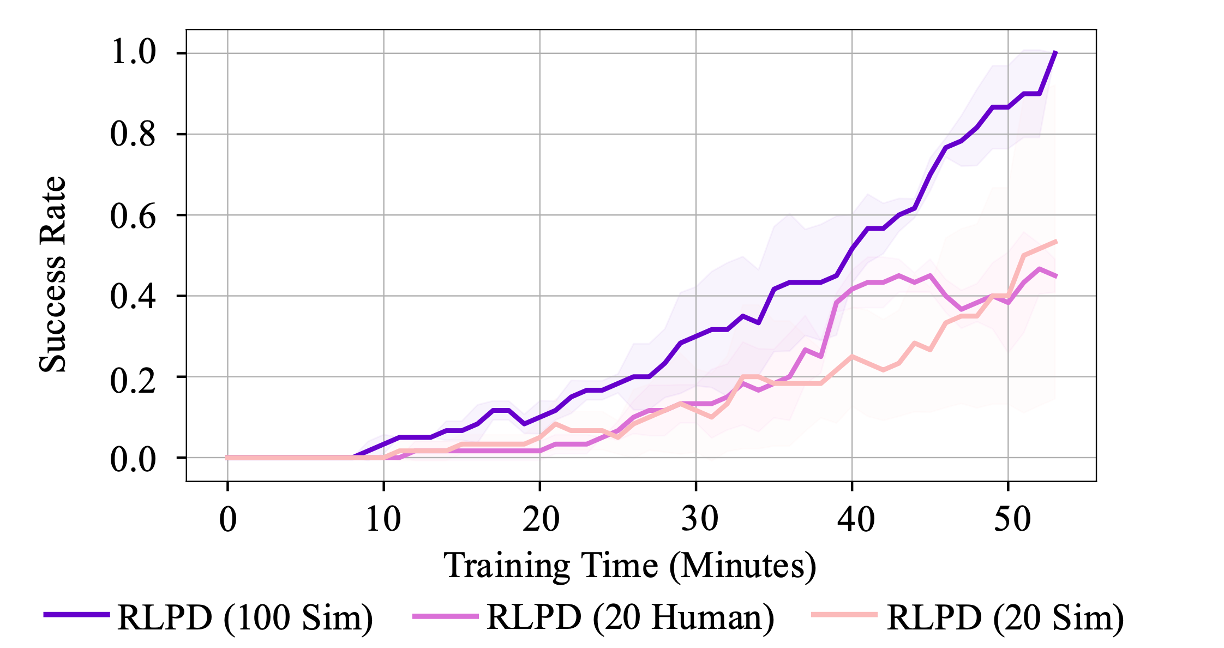

Takeaway 2: Simulated demonstrations alone enable effective bootstrapping.

A key concern when using sim demos for bootstrapping is that the critic might overfit to simulation-specific features, allowing it to distinguish between sim demos and the real-world replay buffer.

Despite this potential pitfall, we find, surprisingly, that in our pick-and-place task sim demos alone are enough to launch RL training.

For optimal performance, however, our ablation study suggests combining sim demos with a small number of real demos.
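
A minimal sketch of how sim demos, real demos, and the online replay buffer might be mixed into each training batch. The 50/50 split between demonstrations and online data follows RLPD-style symmetric sampling and, like the even sim/real split, is a hypothetical default rather than SimLauncher's documented ratio; `sample_mixed_batch` is an illustrative name. Passing an empty `real_demos` list gives the sim-demos-only setting described above.

```python
import numpy as np


def sample_mixed_batch(sim_demos, real_demos, online, batch_size, rng,
                       demo_frac=0.5, real_share=0.5):
    """Draw one training batch mixing demonstrations with online replay data.

    sim_demos, real_demos, online: lists of transitions (any Python objects).
    demo_frac: fraction of the batch drawn from demos (hypothetical default).
    real_share: fraction of the demo portion drawn from real demos (hypothetical).
    Returns a list of (source_name, transition) pairs.
    """
    have_demos = bool(sim_demos or real_demos)
    if not have_demos and not online:
        raise ValueError("all buffers are empty")
    if not online:
        n_demo = batch_size  # before any real-world rollouts: demos only
    elif not have_demos:
        n_demo = 0
    else:
        n_demo = int(round(demo_frac * batch_size))
    if not real_demos:
        n_real = 0  # sim-demos-only setting
    elif not sim_demos:
        n_real = n_demo
    else:
        n_real = int(round(real_share * n_demo))
    n_sim = n_demo - n_real
    n_online = batch_size - n_demo

    batch = []
    for name, buf, n in (("sim", sim_demos, n_sim),
                         ("real", real_demos, n_real),
                         ("online", online, n_online)):
        if n > 0:
            # Uniform sampling with replacement within each buffer.
            idx = rng.integers(0, len(buf), size=n)
            batch.extend((name, buf[i]) for i in idx)
    return batch


# Toy usage with placeholder transitions.
rng = np.random.default_rng(0)
sim = [f"s{i}" for i in range(1000)]
real = [f"r{i}" for i in range(20)]
online = [f"o{i}" for i in range(50)]
batch = sample_mixed_batch(sim, real, online, 256, rng)
print({k: sum(1 for n, _ in batch if n == k) for k in ("sim", "real", "online")})
# prints: {'sim': 64, 'real': 64, 'online': 128}
```

Keeping a fixed share of demonstrations in every batch means demo transitions continue to anchor the critic's targets even after the online buffer grows much larger than the demo buffers.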

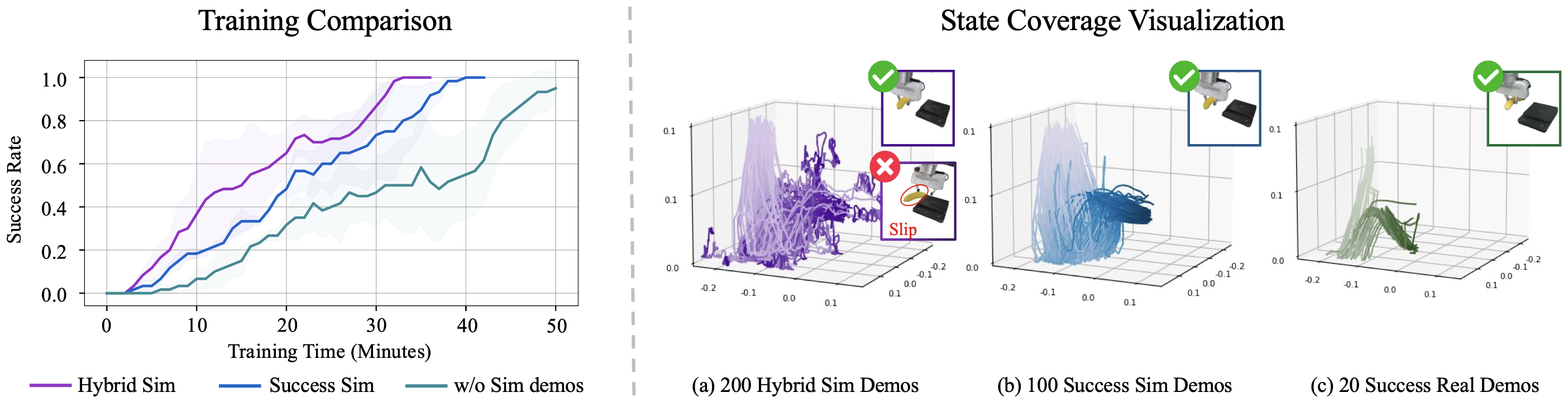

Takeaway 3: Expanding state coverage in sim demos enhances bootstrapping.

Since increasing the number of sim demos improves sample efficiency, we ask whether increasing the state coverage of sim demos can improve it further.

To investigate this, we construct a hybrid demo buffer by collecting rollouts uniformly throughout the sim policy training process, containing both successful and failed rollouts. This approach provides better state coverage.
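
The hybrid demo buffer can be sketched as follows, assuming the simulation run saves policy checkpoints in training order. `build_hybrid_demo_buffer` and `rollout_fn` are hypothetical names, and spacing rollouts evenly across checkpoints is one simple reading of "uniformly throughout the sim policy training process".

```python
import numpy as np


def build_hybrid_demo_buffer(checkpoints, rollout_fn, total_rollouts):
    """Collect rollouts spread uniformly over the sim policy's training run.

    checkpoints: policies saved during sim training, ordered from early to late.
    rollout_fn: hypothetical callable (policy) -> (trajectory, success_flag).
    Successful and failed rollouts are both kept, which broadens state coverage
    compared with keeping only successful rollouts of the final policy.
    """
    # Evenly spaced checkpoint indices from the first to the last checkpoint.
    idx = np.round(np.linspace(0, len(checkpoints) - 1, num=total_rollouts)).astype(int)
    buffer = []
    for i in idx:
        traj, success = rollout_fn(checkpoints[i])
        buffer.append({"checkpoint": int(i), "trajectory": traj, "success": bool(success)})
    return buffer


# Toy usage: each "checkpoint" is a skill level; rollouts succeed once skill >= 0.5.
ckpts = [i / 10 for i in range(11)]
buf = build_hybrid_demo_buffer(ckpts, lambda p: (f"traj@{p}", p >= 0.5), total_rollouts=11)
print(sum(e["success"] for e in buf), "successes,",
      sum(not e["success"] for e in buf), "failures")
# prints: 6 successes, 5 failures
```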

Using the hybrid demo buffer leads to slightly improved sample efficiency, suggesting that expanding state coverage in sim demos can further enhance bootstrapping.

@article{wu2025simlauncher,
  author = {Mingdong Wu and Lehong Wu and Yizhuo Wu and Weiyao Huang and Hongwei Fan and Zheyuan Hu and Haoran Geng and Jinzhou Li and Jiahe Ying and Long Yang and Yuanpei Chen and Hao Dong},
  title = {SimLauncher: Launching Sample-Efficient Real-world Robotic Reinforcement Learning via Simulation Pre-training},
  journal = {IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  year = {2025},
}